Knowing where you want to go is not the same as getting there, and other mathematical topics presented in plain English.

The Nash Equilibrium is a favorite concept of poker players, economists and computer scientists. At the moment the crypto community is indulging in the study of algorithmic game theory with zeal. The most common motivation is the design and analysis of “crypto-economic” protocols. I prefer to think of these as “coordination protocols” as at shifts the attention to the shared goal the protocol is meant to achieve rather than invoke the misplaced assumption that the games in question are zero sum in nature.

Nash is a plenty reasonable place to start as a Nash Equilibrium is characterized by a game and set of strategies by all players for which this is no unilateral incentive to deviate. Nash proved that in case of N player games there is always such an equilibrium, as long as we allow for mixed strategies. A Nash Equilibrium is special case of a fixed point, defined for the formal mathematical objects, we call games. As the games themselves become more complex, say with more players, asymmetries or more complex dynamics defining the interrelation of the payouts for players, an existence proof for a fixed point becomes a lot less useful.

For starters, existence and uniqueness are different mathematical properties of fixed points. Discovering, defining or deriving a Nash Equilibrium for a particular game does not rule out the existence of other Nash equilibria. Some games can be proven to have no pure Nash Equilibria, meaning that mixed or randomized strategies are required — for example rock paper scissors. The Nash equilibrium is each player choosing one of the three with equal probability.

Introducing mixed strategies is a blessing and a curse. By introducing the ability to employ strategies with entropy, the underlying best response optimization problem is relaxed to a continuous domain from a potentially intractable combinatorial problem. Such relaxations are known for making hard problems tractable. However, for arbitrary reward functions, this may quickly result in a large number of equilibria, effectively local optima.

When facing a problem where the goal of designing the game is to create a coordinated outcome, it is prudent to characterize a global reward or social utility function encoding the objective of the game. In a well-designed game there will clear mathematical relationships between the local agents reward functions and the global objective. More on that later, first let us define a tool for assessing a particular equilibrium.

The price of anarchy compares the social utility of a Nash Equilibrium to the social utility of the strategy profile determined by centrally maximizing over all players strategy space as if one omniscient player was playing every agent. These bounding type results help benchmark how good a particular game is at eliciting coordination around a particular shared objective.

The reference examples for games with high price of anarchy is the Prisoners Dilemma for two players and the tragedy of the commons for many players. In each of these cases, the locally optimal strategy profiles for individual agents results in a stunningly unfavorable outcome with respect to not only the central optimal solution in fact leaves all parties strictly worse off in their respective local reward functions as well.

This brings us to the discussion what global social utilities should be. These examples demonstrate that simply defining the social utility as the sum of the individual reward functions does not necessarily lead to desirable outcomes. In fact, it may be the very independence of the local reward functions that is the culprit.

In other coordination problems such as flocking, it is the coupling of the objective functions that drives the system to a coordinate optimal. A review of research into bio-inspired multi-agent coordination finds the emergence of coordination under consensus processes. Unlike the consensus algorithms discussed for resolving the byzantine general’s problem which is a combinatorial problem, these consensus processes involve dynamical systems, and coordination in a continuously valued state space. There are fundamentally averaging algorithms, and capable of producing shockingly robust convergence to coordinated equilibria.

Note that traditionally, game theory does not come equipped with tools for convergence, despite dynamics being a key element in the theory of fixed points. In the case of game theory, the implied process is the game itself, or more precisely the joint process of every player seeking an optimal best response to each other. In a one-shot game, which is the basic construction, this search occurs entirely in the minds of the player, attempting to recursively estimate the actions of all others with respect to their own. The naïve approach is to simply use the Schelling point but the other extreme is an intractable infinite recursion. Fortunately, real life implementations of game theoretic structures are rarely one shot, and repetition changes the nature of the problem. Ensembling and temporal dynamics produce radically different risk profiles and thus decision making paradigms, as frequently pointed out by N.N. Taleb.

In order to introduce the notion of dynamics one moves to repeated games, where strategies are characterized as state dependent or even historically dependent functions. A complete historical trajectory includes the history of all states and actions produced while repeatedly playing the game with a set of other agents. Practically speaking, decision making agents have some input-output policy with bounded input space but the set of all such policies should have few if any other assumptions associated with it formally; what is a rational objective, ironically, is a subjective claim.

The math gets hard fast and best responses quickly become intractable and even good solutions are computationally intensive involving methods like dynamic programming. We won’t go too far down this path; after all if an equilibrium is too hard to compute or as the case here, requires a high abstracted functional optimization in discounted time, an open economy cannot realistically be expected to discover it naturally.

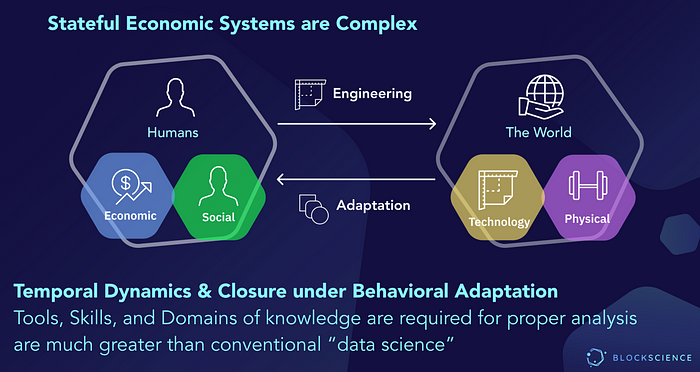

Instead, let’s focus on the simplicity of our bio-inspired coordination and the importance of introducing the notion of dynamics. Since much of the blockchain literature is born of economics and computer science, it is also not naturally equipped with a notion of dynamics. It is not a coincidence, earlier this year I published a paper which build that very construct for blockchain-enabled economies. Having established a foundation for dynamics, I can confidently proceed with a discussion of another class of games which are much more practical for engineers.

Potential games are games where the objectives can be thought of potential fields. For the purpose of aligning with literature on convex optimization, we will think of maximizing our reward instead as minimizing a penalty (which is precisely the negative of the reward function).

In such a potential game, the minima in the potential field are the Nash Equilibria. Furthermore, in repeated play of the same game, it is possible to view the dynamics of the evolving strategies and agents’ actions as realizations of stochastic gradient descent type algorithms. If every local agent makes a move individually ‘downhill’, then this is a step ‘downhill’ in the global objective as long as the gradient of the global objective has negative inner product with the system-wide update vector defined by all those individual actions. This may sound complicated, but it’s just the definition of a descent type algorithm.

This result tells us we’ll discover a local minimum, which is itself one of potentially many Nash Equilibrium, in the general case. However, as the designer of said game, one can do much better by meeting the requirement that the global potential function be convex. This resolves a critical discussion from earlier, uniqueness. A descent type algorithm, will iteratively converge to a unique global minimum of a convex function by naively descending its gradient.

We still have one problem though, I am talking about the global potential function. We are designing local incentives not global ones. This one place where a little math is necessary. How do we connect our global and local functions? It turns out that there are a lot great results in the literature on convex analysis regarding convex functions. Some useful ones are sums of convex functions, quadratic forms with any positive semidefinite matrix, supremum over any set of convex functions and most common regularization functions. No need to worry about these details now, because you can look them up when you need them. The important point is that there are plenty of ways to create mathematically properly aligned local and global convex utility functions, once you know what coordination behavior you are designing for.

In this view of coordination protocol design, there is not actually an optimization algorithm other that the agents themselves, so the primary results of interest are convergence and stability results around the Nash Equilibrium which is a unique global minimum, without need to characterize the agents’ behavior beyond decrease in the potential function in expectation. This type of stochastic process is called a super-martingale, which actually allows for each agent to move against own interests quite frequently without breaking convergence properties.

For economic systems, I am particularly fond of this construction because it makes for a very weak notion of rationally: agents need not play best response, they merely need to descend in expectation, which permits quite a bit of irrationality or even transient antagonism. Since these potential functions are largely financial in nature, one can rather reasonably assume that perpetual trying to climb the potential function, effectively working against the coordination protocol will always cost you money. Without an infinite supply of funds, one will eventually fall back down the potential well just to stay solvent.

As a benefit of introducing the concept of dynamical systems, there is a much more complete theory associated with stability around fixed points. The result can go from the extremely abstract such as Brouwer Fixed point theorem to the practical results on super-martingale convergence of stochastic processes. In the particular case of blockchain economies, a realistic angle would be convergence to a neighborhood of the equilibrium and a proof that event where the state leaves that neighborhood has measure zero, which makes its probability infinitesimal. I’ve written proofs of this nature for decentralized algorithms before. While they can be a lot effort formally, the informal proof is quite intuitive. You can imagine water running down hill and converging at the lowest point; even if there is some turbulence in the resulting pool, it still more or less stays in the low point.

This more or less covers the topics necessary to turn a network of agents under a single mechanism with a single goal into an evolutionary optimization problem, where the genetic process is unknown. This approach still fundamentally relies on the incentive structures and the assumption that the incentives of the agents actually map to the local utilities that the mechanism was designed from.

In practice, there are often externalities that cause agents to prefer actions other than those a model assumes. One of the main benefits of blockchain in economic networks with externalities is that the costs of those externalities can modeled into the incentives and be real costs may be levied on the agents to avoid coordination failures as in the case of the tragedy of the commons.

During this discussion we’ve made every effort to find the weakest assumptions on agent behavior from which we can realistically expect to converge to the neighborhood of known equilibrium. There is a next level concept that I consider to be as important, if not more so: configuration spaces or reachable spaces. These topics also occur in reinforcement learning related to agents who must learn about their environment from interacting with it.

When a system is defined by the way it can change as in the case in blockchain networks for which there must be explicit rules defining all legal transactions, the current state and the set of all legal actions are sufficient to characterize all states that might be reached in the future. More importantly, those sets of legal actions are being designed as part of economic system design process.

These are the action spaces define all possible behaviors of agents irrespective of motivation or incentives, and the resulting configuration spaces define all possible future states. It is informative to review these concepts in the context of other high level goals such as motion planning.

Suppose you had a tool to design these legal action sets to ensure your system goals were captured in the configuration space itself, rather than as a byproduct of some particular behavioral model. One could basically ignore all models of agent behavior except to assume that the blockchain ensure all actions taken are allowed. Conveniently, that is what the consensus algorithms in a blockchain network do: irrespective of agents attempts otherwise only valid transaction actually occur.

We actually have that tool and we’re already using it; it is called an invariant in both computer science and dynamical systems. An invariant is any function of the state space which remains unchanged under a legal action. Building on this, we can consider any scalar functions of the statespace and examine how they evolve under all of the legal actions. In my paper, we explore mathematically how the sum of all bitcoin remains equal to the sum of all mining rewards despite an infinitely expanding number of unique accounts. The sum of all bitcoin itself is a scalar function of the state.

Things get very interesting when we examine more general scalar functions of the system state, which I call value functions. Value functions properly paired with legal action states can drive the systems configuration space to a desirable subspace in a manner provable along the lines of the Brouwer fixed point theorem mentioned earlier.

This is a very non-intuitive approach because we are not actually discussing what agents do or what state the system is in at all, but rather making topological arguments regarding the spaces of actions and the achievable state spaces given any sequence of those actions. Similar abstract methods are applied to formally prove convergence of control algorithms under high degrees of uncertainty, using non-negative scalar functions of the state called Lyapunov functions. One can think of these functions like augmenting the existing energy fields like gravity with additional fields imbued through incentives or other mathematical constructs that essentially modify the shape of space, well actually, cyberspace.

Lyapunov methods demonstrate properties of a system examining how scalar functions encoding the desired properties evolve under all legal state changes, which in the controls field are defined by the composition of a system plant and a controller engineered to modify that systems closed loop behavior. An all too common misconception about control theory is that one has a lot of control; in fact, controllers are from omniscient and omnipotent. The entire field revolves around what one can accomplish with limited degrees of controllability and observability. Due to this fact, most controls work is sufficiently indistinguishable from optimization and task specific AI that it no longer gets the attention as a field that it merits.

While blockchain systems differ intuitively from control systems, they have deep mathematical similarities. Notably their systematic decomposition between internal dynamics and inputs which is most evident the linear state space model representation.

Having established invariants as scalar functions that remain unchanged, one can extend this notion to any functions with provable desirable properties under a set of legal transactions. A convergence or tracking property, to a desirable subspace would be encoded using a construct like a Lyapunov function, which to tie back to earlier discussions, is best understood as a generalized potential function. The informal proofs for Lyapunov convergence and stability look very much the same as our arguments for potential games and convex function but abstracted to functions and spaces. Slightly more concrete examples can be found in queuing theory for networks. My most recent work in that area can be found here.

Since entering the Blockchain research community, I’ve resumed my work and began to establish an area of research at the boundary of optimization theory, dynamical systems and game theory which I claim is of paramount interest to the blockchain community is it provides the most general guarantees on system behavior possible.

On a semantic note, I feel it is only proper that the field of cryptography be expanded to account for economics in a manner that proves facts about “that which can and cannot happen”, rather than merely “what is or is not likely under some behavioral assumptions.” The preliminary framework for this general approach is presented here (third time is the charm). Remember, all models are wrong, but some are useful; I hope you find mine useful.