How can algorithm design be reconceptualized as policy-making to create safer digital infrastructures?

Michael Zargham & Kelsie Nabben

In a digitised society, algorithms fulfil the function of policies: they constitute the rules of governance on online platforms. Yet they are not being designed with adequate consideration for those being governed.

Software ‘code’ is the set of rules a computer follows. Yet it is increasingly common to also refer to “rules as code”, in the sense that code defines the rules through which humans interact with each other. This development is not merely theoretical: algorithms make decisions about social media posts, credit ratings, the routes to follow when driving, and more.

At first glance, it might seem as simple as humans defining rules for computers and computers defining rules for humans. However, deeper consideration exposes significant inequality between those making rules via code, and those bound by the coded rules. For example, workers whose livelihoods are dictated by platform algorithms are said to be “below the API”.

Thus, ‘code’ increasingly performs the traditional function of the law as ‘the rules by which humans interact with one another’. It is therefore incumbent upon software engineers to approach algorithm design with the awareness expected of policy-making, including the burden of responsibility to the ‘public good’.

To formalise this concept of ‘algorithms as policy’, it is useful to approach digital infrastructure as critical, public infrastructure. Through this lens, it is possible to explore the social consequences of algorithmic policy-making, including lack of accountability, subjectivity, bias, and “real-world” consequences. We argue that it is only through the recontextualization of algorithmic design as a modern form of policy-making that we can surface subjective assumptions, enhance accountability, and enable feedback processes required for communities to thrive in the information age.

Coding Reality

We are living in a digital society. Our transactions and interactions depend on digital infrastructure. In this society, policy-making has become a duality: nation-state government policy in the physical world operates in parallel with the rules of virtual platforms in cyberspace.

The borders between cyberspace and the physical world have become porous, meaning that online phenomena manifest in society in physical reality (or ‘meatspace’). Examples include COVID-19 conspiracy protests, government responses to Twitter misinformation warnings, and memetic warfare related to the BLM protests. But whose reality is this?

Digital infrastructure is composed of finite sequences of well-defined, computer-implementable instructions, known as algorithms. While the mathematical models that underpin these algorithms may be highly effective in representing social phenomena, they are imperfect reductions of our experienced reality.

What are “Algorithmic Policies”?

Technology platforms provide patterns for human interactions. The experience of the users is extremely sensitive to the algorithms embedded in those platforms, at both a local and a systemic level. These algorithms are tantamount to policies. As such, their design and maintenance are functionally equivalent to governance.

The problem with performing governance functions through algorithms in society is that defining the goals of algorithmic sequences of instructions is a subjective practice. If markets are the invisible hand of capitalism, then algorithms are the invisible hand of digital systems.

Algorithms are performative, in that they simultaneously enable and limit actions on the part of a platform’s users, inducing direct effects on the social institutions those platforms intermediate.

Furthermore, proprietary technology platforms and algorithms are opaque in design, function, goals and governance, leading to a perversion of the social institutions they mediate. This phenomenon of private governance towards commercial ends is known as ‘platform determinism’.

With little accountability, our ‘algorithmic dependence’ is resulting in the breakdown of democratic institutions, access to information, and trust in society.

It is only through the recontextualization of algorithmic design as a modern form of policy-making that we can surface subjective assumptions, enhance accountability, and enable the feedback that communities need to thrive in the information age.

Algorithms as Governance

Designers and users must keep the algorithms in digital platforms explicitly in mind, because digital systems design carries the same significance as policy-making and governance processes in shaping societal realities. When algorithms are perceived as governance in digital infrastructures, it becomes necessary to acknowledge both the socio-political and the technical elements of system engineering.

Infrastructure is co-produced through an “interplay of epistemic and political processes” between technological and social orders. The processes of funding, creating, governing and maintaining infrastructure are also inherently political.

The concept of ‘governmentality’ looks at how power is exercised through a network of institutions, practices, procedures and techniques. Peeling back components of digital infrastructure to the level of human biology and the political reveals the messy social processes and politics of a socio-technical system. Thus, the outcome can often reveal more about governance theory and practices, which need to be considered, than the algorithm itself.

Approaching the design of technical infrastructure layers as a governance design problem encourages an awareness of the system designers’ goals, as well as a requirement to consider the ‘public good’. This surfaces the need for both engineering and governance mechanisms, including: feedback loops, accountability and recourse mechanisms, the roles and responsibilities of participants in the system, and acknowledgement of digital infrastructure as a complex adaptive system.

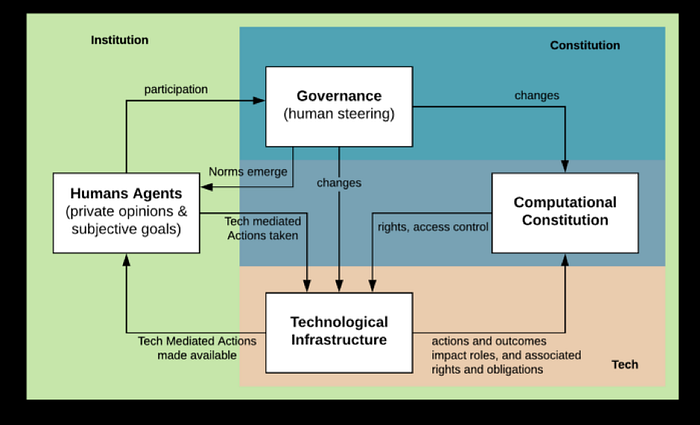

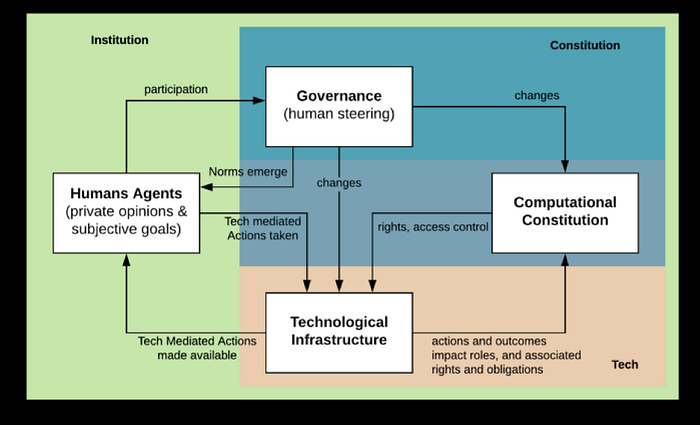

The design component of digital infrastructure is the relationship between ‘technological infrastructure’ and ‘human agents’. Here, the logic embedded in the code creates an option space for technology-mediated actions for people, and determines the feedback loops between components of the system.

Policy-making problems become coding problems

When algorithms are viewed as policies, the problems of subjectivity, lack of accountability, and lack of recourse become apparent.

An algorithmic policy is a kind of complete contract, analogous to policy-making in traditional settings. It takes an input and renders a provably deterministic output. Yet there is no transparency by which to assess the faithful execution of the policy, or its fairness in relation to the ‘public good’.

Data and algorithms are not objective. As there is no objective guarantee of the relationship between ‘rules’ and outcomes, algorithmic policy-making is intersubjective. Policies enshrined in software have a powerful role in determining what information users are exposed to and what actions those users are able to take — from internet service providers, to online marketplaces, to social networks, search engines, and so on. Meanwhile, the programming decisions that designers make when coding these policies are subject to their cultural, historical and political backgrounds, and the opportunities and constraints of the incentives that drive their work.

This bias is reinforced when problems are framed as coding challenges, with objective solutions, rather than as policy making challenges, which require a socio-technical approach. This blindness to the subjective nature of policy making leads the platform owner to confidently justify their policy decisions, while failing to consider what the objectives of the policy-making are, or to engage with the real consequences their decisions have on the users.

For example, consider the subjectivity of PageRank, the algorithm which governs how Google search results are ranked and presented. This algorithm is responsible for the ordering of information and the mediation of access to information on the web. PageRank is part of the rules, or computational constitution, of Google, which governs the way that people can interact with the internet.
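To make the point about subjectivity concrete, here is a minimal sketch of the textbook PageRank iteration in Python. It is not Google's production ranking system, and the four-page link graph is hypothetical. The `damping` parameter (0.85 is the value usually cited) and the rule for pages with no outgoing links are design choices made by whoever writes the code, and they change the scores every page receives.

```python
def pagerank(links, damping=0.85, iterations=100):
    """Textbook PageRank by power iteration.

    `links` maps each page to the list of pages it links to.
    Every linked page is assumed to appear as a key in `links`.
    """
    pages = sorted(links)
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}
    for _ in range(iterations):
        # Every page receives a baseline share, set by the damping choice.
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page, outlinks in links.items():
            # Design choice: a page with no outlinks spreads its rank evenly.
            targets = outlinks if outlinks else pages
            share = damping * rank[page] / len(targets)
            for target in targets:
                new_rank[target] += share
        rank = new_rank
    return rank


# Hypothetical link graph: page "c" is linked to by every other page,
# while page "d" is linked to by none.
links = {"a": ["b", "c"], "b": ["c"], "c": ["a"], "d": ["c"]}

default_ranks = pagerank(links)               # damping = 0.85
flatter_ranks = pagerank(links, damping=0.5)  # a different policy choice

for page in sorted(links):
    print(page, round(default_ranks[page], 4), round(flatter_ranks[page], 4))
```

Lowering `damping` raises the baseline score of the unlinked page `d` and narrows the lead of page `c`. Neither setting is objectively correct: each encodes a judgement about how much visibility should flow from links and how much every page receives by default.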

Challenges to a functioning digital society emerge in that the entities responsible for this digital-social infrastructure are not legally beholden to the interests of the users, or the ‘public good’. On the contrary, they are often private stakeholders that have a fiduciary duty to shareholders. User attention is routed and their data is harvested.

While any sufficiently new technology may be considered a novelty item, once a technology becomes necessary for participation in society, it is civil infrastructure. Civil infrastructure such as roads and bridges, water systems, and power grids is regulated as a utility. The physical layer of the internet is currently facing this transition towards accountability via regulation, such as Internet Service Provider (ISP) legislation. But what about accountability for the algorithmic, ‘application’ layer of the internet?

The algorithmic policies embedded in online platforms have serious consequences for our lives, yet the feedback loops that would hold them accountable for those effects are weak. Can one really know how an algorithm influences or discriminates against people? As with any question of policy, it is necessary to distinguish between the policy objective, the policy itself, and the faithful execution of that policy. Without transparent feedback loops, accountability is lacking in algorithmically mediated systems. Even if a user agrees with a stated policy, there are no means to verify its faithful execution in black-box code, or to seek recourse given the existing asymmetries between people and platforms.
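The distinction between a policy and its faithful execution can be sketched in a few lines of Python. Everything here is hypothetical: the credit-style rule, the threshold of 600, the `Decision` record and the decision log are assumptions made for illustration, not a description of any real platform. The sketch shows that checking execution is straightforward when both the stated rule and the record of decisions are open to inspection, which is precisely what black-box code withholds.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Decision:
    """One logged outcome of a hypothetical platform decision."""
    applicant_score: int
    approved: bool


def published_policy(applicant_score: int, threshold: int = 600) -> bool:
    """The stated policy: approve whenever the score meets the threshold."""
    return applicant_score >= threshold


def audit(decisions, policy):
    """Return the logged decisions that the stated policy would not have produced."""
    return [d for d in decisions if policy(d.applicant_score) != d.approved]


# Hypothetical decision log. The third entry contradicts the stated policy.
log = [Decision(640, True), Decision(580, False), Decision(610, False)]

violations = audit(log, published_policy)
print(violations)
```

An audit of this kind addresses only faithful execution. Whether a threshold of 600 serves the policy objective, or the ‘public good’, remains a separate question that no replay of the log can answer.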

Because digital platforms are social infrastructure, the societal interventions of algorithmic policies cause systemic, second-order effects. These effects also reach existing sense-making institutions. This was seen in the paradoxical manoeuvre to regulate social media platforms in the US while relying on them for public voice and influence. As with any infrastructure, these effects usually become apparent upon breakdown.

In sum

It is only through the recontextualization of algorithmic design as a modern form of policy-making that both designers and users can surface subjective assumptions, enhance accountability, and enable the feedback that communities need to thrive in the information age.

This framing allows us to explore algorithmic policy-making in various settings. These include emerging software development communities, such as those building decentralised digital infrastructure, which can teach us a great deal as experimental ground for testing the consequences of code. This is an essential exercise for evaluating engineering and governance concepts before attempting to scale machine-human integrated solutions.

Related topics which we intend to explore include: how do we ‘safely’ test algorithmic policies; what alternatives are there to centralised, deterministic platforms; and how do they allow people to participate in ownership, governance and decision-making to switch the role of people in the system from “users” to “participants”?

End.

Cite as: Zargham, M. and Nabben, K., “Algorithms as Policy: How can algorithm design be reconceptualized as policy-making to create safer digital infrastructures?”, 2020, <https://kelsienabben.substack.com/p/algorithms-as-policy>.

About BlockScience

BlockScience® is a complex systems engineering, R&D, and analytics firm. Our goal is to combine academic-grade research with advanced mathematical and computational engineering to design safe and resilient socio-technical systems. We provide engineering, design, and analytics services to a wide range of clients, including for-profit, non-profit, academic, and government organizations, and contribute to open-source research and software development.