Putting the Sybil Detection Machine Learning Algorithm to the Test

This article is an update to the Gitcoin community about the results of the Gitcoin DAO Anti-Fraud working group, particularly on the success of the sybil detection machine learning pipeline in catching fraud in Gitcoin Round 10.

Background

The Anti-Fraud working group of the Gitcoin DAO kicked off just prior to Gitcoin Round 10, stemming from an impressive collaboration between the Gitcoin team, BlockScience, and engaged Gitcoin stewards. The aim of this working group is to build policy and process towards defining, detecting, evaluating and sanctioning donation behavior that goes against Gitcoin Grants policy and terms of service. The working group is in the process of progressive decentralization towards management by empowered community stewards, and this round was the first time engaged Gitcoin community members were able to get involved.

One component of the working group is the sybil detection machine learning (ML) algorithm, led by the BlockScience team. A dedicated team of stewards feeds human judgement into the process, giving the ML algorithm a reference point for separating sybil users from non-sybil users. This post explores the results of this workstream at the wrap-up of Gitcoin Round 10, which finished on July 1.

The Results

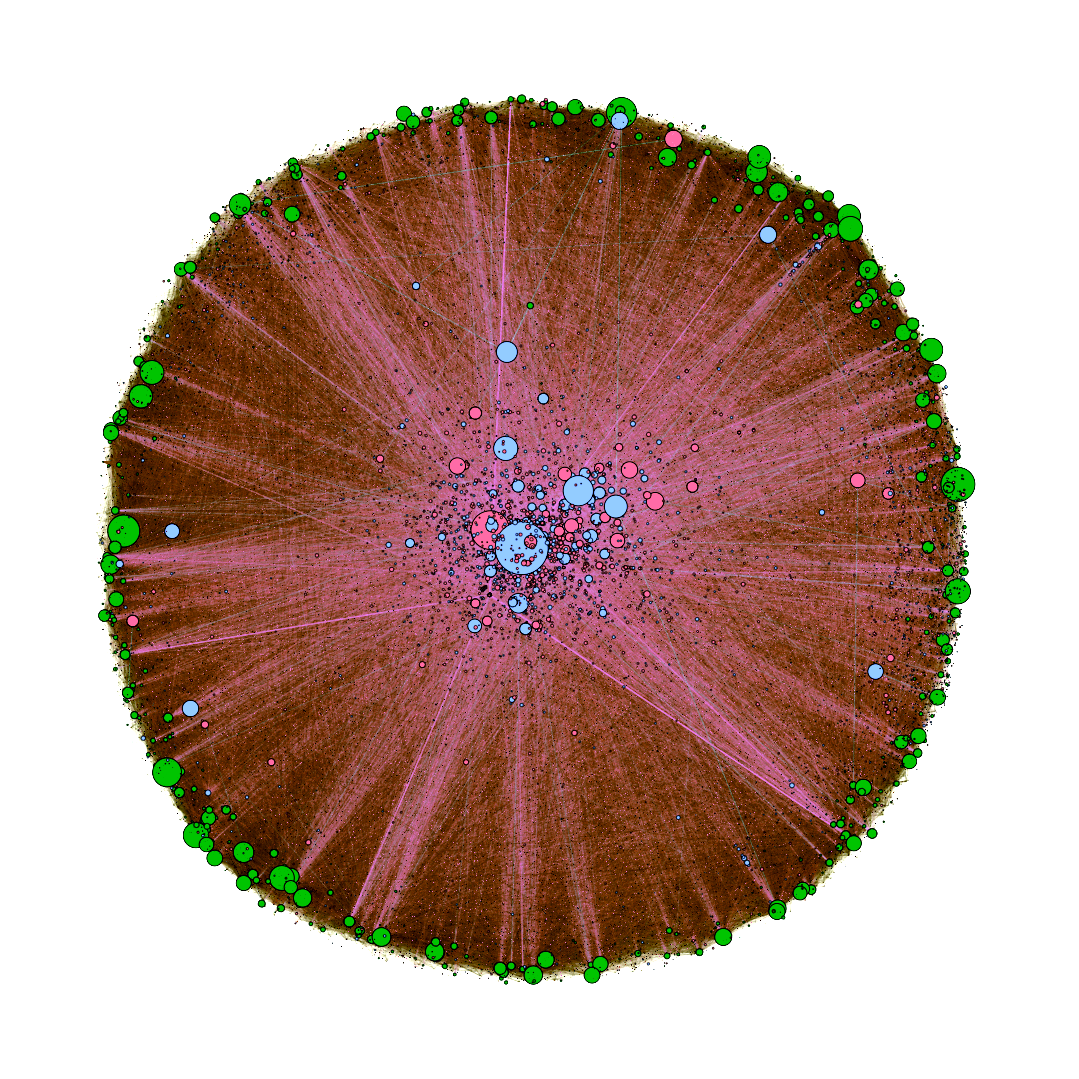

In this round, we experimented with how aggressive the algorithm needs to be to catch sybil (i.e. fake) donors. The aggressiveness setting tunes the trade-off between the sensitivity and specificity of the flagging model. Throughout Gitcoin Round 10, the sybil detection pipeline ran at a 20% aggressiveness score. At 50%, the algorithm is optimized to maximize sybil detection accuracy, even at the cost of wrongly flagging some non-sybil users, while values closer to 0% mean it is optimized to minimize the false detection rate but could miss some sybil users. An aggressiveness score of 20% means that users who scored between 0.8 and 1 on the ML algorithm (as seen in the image above) are deemed likely sybil, pending steward ratification of the results of the sybil detection pipeline.
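The thresholding rule described above can be sketched in a few lines of Python. This is only an illustration, not the pipeline's actual code: the function name, the user IDs, and the scores are hypothetical, and the mapping `threshold = 1 - aggressiveness` is an assumption inferred from the single example given (20% aggressiveness corresponding to scores of 0.8 to 1). Whether the boundary score itself is included is also an assumption.

```python
from typing import Dict, List


def likely_sybil_users(scores: Dict[str, float], aggressiveness: float) -> List[str]:
    """Return the users whose ML score falls in the flagged range.

    Assumption (not confirmed by the source): the cut-off is
    1 - aggressiveness, so 20% aggressiveness flags scores of 0.8 and up.
    """
    if not 0.0 <= aggressiveness <= 1.0:
        raise ValueError("aggressiveness must be between 0 and 1")
    threshold = 1.0 - aggressiveness
    # Flagged users are only *likely* sybil; stewards still ratify the result.
    return sorted(user for user, score in scores.items() if score >= threshold)


# Hypothetical scores, for illustration only.
example_scores = {"user_a": 0.95, "user_b": 0.81, "user_c": 0.79, "user_d": 0.10}

flagged_at_20 = likely_sybil_users(example_scores, aggressiveness=0.20)
flagged_at_50 = likely_sybil_users(example_scores, aggressiveness=0.50)
```

With these made-up scores, raising the aggressiveness from 20% to 50% additionally flags `user_c`, which illustrates the trade-off: more sybil users are caught, at a higher risk of flagging legitimate donors.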

To address the results of the anti-sybil algorithm, the term ‘fraud tax’ was introduced, which is formally defined here. In Round 9, Gitcoin community stewards ultimately decided to pay the ‘fraud tax’, and Kevin Owocki, Gitcoin’s CEO, wrote an excellent explanation of the details and reasoning behind this decision in this governance brief on Gitcoin Grants Round 9.

So how did Grants Round 10 stack up?

The fraud tax for Gitcoin Round 10 was $14,400, approximately 2.1% of the total matching funds of $700,000. In contrast, the fraud tax in Gitcoin Round 9 was $33,000, or 6.6% of that round's total matching funds.
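For readers who want to check the arithmetic, here is a minimal sketch using only the figures quoted above. The function name is hypothetical. The Round 9 matching pool is not stated in this post, so the value computed below is merely what the quoted $33,000 and 6.6% imply, assuming both figures are not heavily rounded.

```python
def fraud_tax_share(fraud_tax_usd: float, matching_pool_usd: float) -> float:
    """Fraud tax as a fraction of the total matching pool."""
    return fraud_tax_usd / matching_pool_usd


# Round 10: both figures are quoted in the text.
round_10_share = fraud_tax_share(14_400, 700_000)

# Round 9: only the tax and the percentage are quoted, so the pool size
# is back-calculated here and should be read as an implied estimate.
implied_round_9_pool = 33_000 / 0.066

print(f"Round 10 fraud tax share: {round_10_share:.1%}")
print(f"Implied Round 9 matching pool: ${implied_round_9_pool:,.0f}")
```

On these numbers the fraud tax fell both in absolute terms and as a share of matching funds, even though the Round 10 matching pool was larger.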

It is worth noting that the fraud tax is only one KPI for the Anti-Fraud working group of the Gitcoin DAO. Several other workstreams are in progress simultaneously: verifying that grants are real (and follow the Grants terms of service) when they enter the system, and establishing sanction and appeals processes to properly handle disputes in the flagging process. Procedures and precedents for these other aspects of fraud detection are being steadily iterated on, to maintain Gitcoin Grants as a fair and credibly neutral funding platform for web3 public goods.

In Conclusion

The construction of the sybil detection algorithm, and of the operations pipeline that supports and enacts its outputs, has been an ongoing process over the past several months. We are excited to see it in use, providing helpful results that scale the Gitcoin DAO's ability to detect and mitigate sybil attacks, which could otherwise threaten the credible neutrality of the Grants ecosystem. We have many iterations and improvements planned for the sybil detection pipeline between now and the start of Gitcoin Round 11.

To stay informed on developments and participate in the anti-fraud working group, join the conversation in the Anti-Fraud-Sybil channel in Discord, or keep up with the workstream on the forum: gov.gitcoin.co

About BlockScience

BlockScience® is a complex systems engineering, R&D, and analytics firm. Our goal is to combine academic-grade research with advanced mathematical and computational engineering to design safe and resilient socio-technical systems. We provide engineering, design, and analytics services to a wide range of clients, including for-profit, non-profit, academic, and government organizations, and contribute to open-source research and software development.