Tuning the Algorithm and Building Microservices

Since Gitcoin Round 7, the BlockScience team has been working with the Gitcoin team and community to examine the quadratic funding system for attack vectors and to work out how to defend against them, so that Gitcoin remains a credibly neutral funding tool for the Ethereum public goods ecosystem. This article updates the Gitcoin community on the results of that work, for use by the Gitcoin DAO Fraud Detection and Defense working group (FDD), and on the results of the Sybil detection machine learning pipeline in Gitcoin Round 11.

Background

The Gitcoin ecosystem has experienced massive growth in its quadratic funding program for Ethereum public goods, more than doubling its match funding in one year — from $450k in Round 7, to $965k in Round 11. As the stakes get higher, the rewards for gaming the system become more attractive to potential Sybil attackers.

Previous work by BlockScience, the Token Engineering community, and Gitcoin stewards in earlier rounds aimed to deter adversarial behaviour at scale on Gitcoin Grants. These groups continue to explore how to attack and defend Quadratic Funding to improve the accuracy of Sybil and collusion detection, and protect the integrity of the Gitcoin grants system.

Current Work

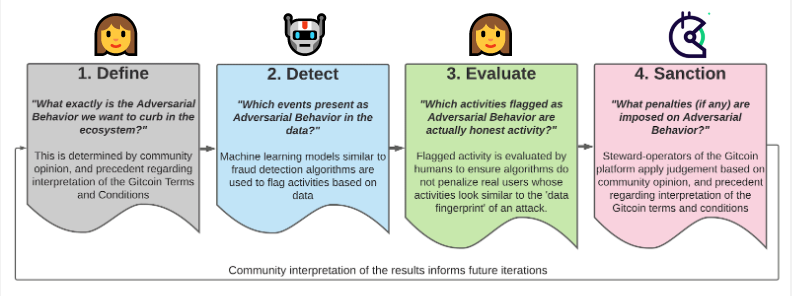

A key tool in the fraud defense toolkit is the Sybil detection machine learning (ML) algorithm, which is headed up by BlockScience with input from a dedicated team of Gitcoin stewards. Each round, the teams tune the algorithm, while human evaluators review the results and refine the machine-human feedback loop to strengthen fraud detection and inform appropriate policies. The flags provided by the humans serve as a statistical survey, allowing BlockScience engineers to estimate how many real Sybil users there are, which in turn indicates how far the ML detection is from achieving parity with the attackers.

In the last round the algorithm was tuned to an aggressiveness level of 20%; in this round it was turned up to 30%. The higher setting makes the algorithm more sensitive to parameters that could indicate Sybil behavior, at the cost of potentially capturing more false positives.

So, what did the data captured in Gitcoin Round 11 tell us? This post explores the results provided by this workstream for the wrap-up of the funding round, which finished on September 23.

Round 11 Results

The entire FDD process, which consists of a symbiosis of Human Evaluations, ML Predictions and SME Heuristic Flags (a set of human-made conditions for generating flags), flagged 853 contributor accounts as potential Sybils out of 15,986 total contributors (5.3%). Based on statistical considerations that use the Human Evaluations as surveys, the best estimate for the actual Sybil incidence is likely closest to 6.4%, with a range between 3.6% and 9.3%.

As an exercise only, if we treated the surveys as representative of the True Sybil Incidence, we could say that the FDD process is probably effective at catching about 83% of Sybil users on the Gitcoin platform. We cannot exclude the possibility that we’re catching all of the Sybil users, and it is highly unlikely that we’re catching fewer than 57% of them.
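One way to see where figures like these can come from is to divide the share of flagged accounts by the estimated Sybil incidence. The sketch below is an illustration only: it assumes every flagged account is a real Sybil (no false positives), which is a simplification and not necessarily how the FDD team derived its numbers. The `coverage` helper is a hypothetical name introduced here.

```python
# Figures quoted in the article for Gitcoin Round 11.
flagged_accounts = 853
total_contributors = 15_986

# Estimated true Sybil incidence from the human-evaluation surveys:
# lower bound, best estimate, upper bound.
incidence_low, incidence_best, incidence_high = 0.036, 0.064, 0.093


def coverage(flag_rate: float, incidence: float) -> float:
    """Share of Sybils caught, ASSUMING every flagged account is a real Sybil.

    Capped at 1.0, since the process cannot catch more than all of them.
    """
    return min(1.0, flag_rate / incidence)


flag_rate = flagged_accounts / total_contributors

print(f"flag rate:             {flag_rate:.1%}")
print(f"best-estimate case:    {coverage(flag_rate, incidence_best):.0%}")
print(f"high-incidence case:   {coverage(flag_rate, incidence_high):.0%}")
print(f"low-incidence case:    {coverage(flag_rate, incidence_low):.0%}")
```

Under that assumption the ratios land on roughly 83% for the best estimate and 57% for the high-incidence bound. At the low-incidence bound the flag rate exceeds the estimated incidence, which is why catching all Sybils cannot be ruled out.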

In addition to tuning the algorithm, fraud flag evaluation is also an area of continuing iteration. Determining whether flagged contributions are fraudulent is not an exact science. Because some edge cases are unclear, Gitcoin stewards continue to consider different ways of handling them when allocating matching funds to these contributions. One of those considerations is the “Fraud Tax”.

The term Fraud Tax was introduced in prior rounds as a metric, which is formally defined here. Essentially, the Fraud Tax is determined by looking at two scenarios: CLR matching calculated as is, and CLR matching calculated with suspected Sybils removed. For each individual grant, evaluators take the better (maximum) of the two matching amounts. When you add up all of these best cases for all of the grants, the total will end up being more than the total CLR matching pool, and the difference between the two is what is being called the Fraud Tax.
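The definition above can be sketched in a few lines of Python. This is a minimal illustration of the description in this article, not the formal definition or the production CLR code; the function name, the grant names, and the dollar amounts are all hypothetical, and the sketch assumes both scenarios distribute the same matching pool across the same set of grants.

```python
def fraud_tax(matching_as_is: dict[str, float],
              matching_sybils_removed: dict[str, float]) -> float:
    """Sum of per-grant best-case matching minus the matching pool.

    Assumes both scenarios cover the same grants and split the same pool.
    """
    if matching_as_is.keys() != matching_sybils_removed.keys():
        raise ValueError("both scenarios must cover the same grants")

    # The pool is whatever the as-is scenario distributes in total.
    pool = sum(matching_as_is.values())

    # For each grant, take the better (maximum) of the two matching amounts.
    best_case_total = sum(
        max(matching_as_is[grant], matching_sybils_removed[grant])
        for grant in matching_as_is
    )
    return best_case_total - pool


# Hypothetical three-grant round with a $1,000 matching pool.
as_is = {"grant_a": 500.0, "grant_b": 300.0, "grant_c": 200.0}
sybils_removed = {"grant_a": 350.0, "grant_b": 400.0, "grant_c": 250.0}

print(fraud_tax(as_is, sybils_removed))  # 500 + 400 + 250 - 1000 = 150.0
```

In this made-up example, grant_a benefits from the suspected Sybil contributions, so removing them shifts matching toward grant_b and grant_c. Paying every grant its better outcome costs $150 more than the pool holds, and that shortfall is the Fraud Tax.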

If the Fraud Tax is paid (subsidized by Gitcoin), it will ensure that no grant has a net loss in CLR matching due to the Sybil attacks; however, it also means that potential Sybils succeed in benefiting their intended grants. In Round 9, Gitcoin community stewards ultimately decided to pay the Fraud Tax, and Kevin Owocki, Gitcoin Holding’s CEO, wrote an excellent explanation of the details and reasoning for this process in this governance brief on Gitcoin Grants Round 9. Although Gitcoin stewards have previously decided to pay out the Fraud Tax from the Gitcoin community multisig, the decision for this round will again need to be evaluated and voted upon by the stewards. While in prior rounds this policy decision may have been made to help support funds for grants caught in the crossfire, the policy must continue to be reviewed to ensure its systemic incentives remain aligned with the overall goals of the Grants ecosystem.

The Fraud Tax estimate for Gitcoin Round 11 was $5,787, approximately 0.6% of the total matching funds of $965,000. In contrast, the Fraud Tax in Gitcoin Round 10 was $14,400, or 2.1% of that round's total matching funds, and in Round 9 it was $33,000, or 6.6% of the total matching funds. This appears to be a positive trend that may suggest fraud is being deterred by the efforts of the FDD workgroup, although there are many variables involved, so it is difficult to make any hard claims of causality.
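As a quick arithmetic check on the trend, the snippet below recomputes the Round 11 share and the round-over-round change in the dollar estimates, using only figures quoted in this article. The matching pools for Rounds 9 and 10 are not stated here, so their shares are not recomputed. The `relative_change` helper is a hypothetical name.

```python
# Fraud Tax estimates quoted in the article, in US dollars, keyed by round.
fraud_tax_usd = {9: 33_000, 10: 14_400, 11: 5_787}

# Only the Round 11 matching pool is stated explicitly in the article.
round_11_pool_usd = 965_000


def relative_change(previous: float, current: float) -> float:
    """Fractional change from one round's estimate to the next."""
    return (current - previous) / previous


share_r11 = fraud_tax_usd[11] / round_11_pool_usd
change_9_to_10 = relative_change(fraud_tax_usd[9], fraud_tax_usd[10])
change_10_to_11 = relative_change(fraud_tax_usd[10], fraud_tax_usd[11])

print(f"Round 11 share of pool: {share_r11:.2%}")
print(f"Round 9 -> 10 change:   {change_9_to_10:+.1%}")
print(f"Round 10 -> 11 change:  {change_10_to_11:+.1%}")
```

The dollar estimate falls by more than half in each step, even as the matching pool grew, which is consistent with the declining percentages quoted above.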

While the ML algorithm and FDD process are focused primarily on contributor accounts, the FDD workstream also monitors grants themselves for fraud and abuse. Human evaluators review grant submissions, updates, complaints and fraud tips to monitor the grant side of the Gitcoin platform.

In addition to contributor accounts and grant reviews, the Fraud Detection & Defense workstream is also working to establish sanctions and appeals processes and support other policymaking for the system. Procedures and precedent are being steadily iterated for these other aspects of fraud detection, to maintain Gitcoin Grants as a fair and credibly neutral funding platform for web3 public goods.

FDD Automation Improvements in Round 11

The FDD workstream continues to look at various layers of these processes to improve not only accuracy, but also response times. In this round, BlockScience and the FDD group worked to automate the algorithm and to run it, with human feedback, more often during the round. The aim is real-time fraud monitoring of contributors and of the behavior of those submitting grants, for improved Computer-Aided Governance of the Gitcoin Grants ecosystem. The team improved the microservices that the FDD pipeline calls for up-to-date data, allowing better protection and quicker action in responding to attacks, and plans to continue iterating on these services.

The Road Ahead

BlockScience, along with the Token Engineering and Gitcoin communities, has been working for more than a year to improve fraud detection on the Grants platform. This work has simultaneously been the front line of a Sybil battleground and an enlightening research initiative into gaming and attacks in digital identity-dependent systems like Quadratic Funding. The process is ongoing, and the technical work, along with countless hours from contributors and volunteers, has supported huge improvements in Sybil detection.

In Round 11, the FDD workstream team was able to test more aggressive tuning of the algorithm, capture better accuracy measurements, and improve response times and data calls. These are exciting and promising signs that all of the work is protecting the credible neutrality of the Gitcoin Grants ecosystem!

However, the match amounts up for grabs keep increasing, and while the ML algorithm “learns”, so do the attackers. We have many further iterations and improvements planned for the Sybil detection pipeline and look forward to Gitcoin Round 12!

To stay informed on developments and participate in the FDD workstream, join the conversation in the Anti-Fraud-Sybil channel in Discord, or keep up with the workstream on the forum: gov.gitcoin.co.

Apply to participate as a contributor to GitcoinDAO

Article by Jessica Zartler, with edits & suggestions by Danilo Lessa Bernardineli, Nick Hirannet, Michael Zargham, Jeff Emmett and Charlie Rice.

About BlockScience

BlockScience® is a complex systems engineering, R&D, and analytics firm. Our goal is to combine academic-grade research with advanced mathematical and computational engineering to design safe and resilient socio-technical systems. We provide engineering, design, and analytics services to a wide range of clients, including for-profit, non-profit, academic, and government organizations, and contribute to open-source research and software development.