A Framework for Community-Based Algorithmic Policy Making

This post shares our most recent findings on social and technical behaviors in Gitcoin Grants Round 9, and proposes a framework for community-based policy making in semi-automated systems. These processes need the help of the Gitcoin community to continue testing, iterating on, and improving ‘decentralized’ community-based funding methods like Quadratic Funding in Gitcoin Grants.

Gitcoin Grants Round 9 saw an exciting uptick in donations over previous rounds, with 168,000 donations totaling $1.38MM, and $500k of quadratically matched funds as a cherry on top! In this round, we also saw an increase in suspicious behaviors and the beginnings of what is likely automated collusion and sybil attacks coordinated using bots. In this article, we introduce a four-step framework to identify and prevent these adversarial behaviors, and explain how that framework relies on the help of the Gitcoin community.

Broadly, this is a post about system design. As with any blockchain-enabled governance system, there are both social and technical dynamics at play. We are in the early days of policy making in complex human-machine systems. Because Gitcoin Grants is an early experiment in community funding mechanisms, we need to build and improve these systems iteratively in terms of both their code and their human-governance processes.

This first section explains the kinds of attacks we saw in Round 9. We dig into a few specific examples of what could be perceived as adversarial behavior, and touch on why it is important to maintain the subjective input of human moderators in the definition, detection, evaluation and sanctioning of these behaviors in the Grants process for an effective ecosystem.

More than in any previous round, we’re seeing a huge increase in spammed microdonations to many grants. Some in the Gitcoin community have pointed out that this is likely an attempt to maximize exposure to potential future airdrops, like those handed out after Round 8 by several independent organizations looking to reward crypto-philanthropists and increase their token distribution. This type of incentive complicates our understanding of the “intent” of these donors, which may shift from supporting projects they think are important towards maximizing economic rewards. We may therefore choose to reduce this kind of behavior via the proposed framework, for the long-term health of the ecosystem.

The system design question here becomes: how do we define, detect, and mitigate adversarial behavior in a Gitcoin Grant round to pursue the goal of funding Ethereum public goods?

A 4-Step Framework to Mitigate Adversarial Behaviors

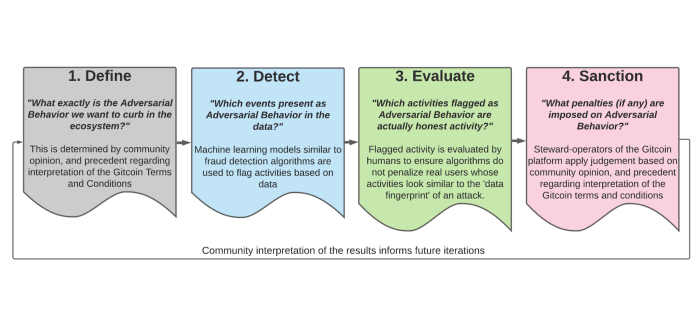

Given the new donation behaviors witnessed in Grants Round 9, the Gitcoin team has been working closely with the data scientists and systems architects at BlockScience and the Token Engineering community to identify replicable processes to separate legitimate grants and donations from spammers and bots. The 4-step framework introduced below is an iterative approach to increasing the sophistication of detection and mitigation of these kinds of unwanted behaviors.

- Define — Determining what behaviors are considered adversarial and inappropriate by the Gitcoin community (not just the Gitcoin team) is our first requirement towards mitigating those behaviors. This is reminiscent of Ostrom’s first principle of “clearly defined boundaries” for acceptable behavior in what could be considered the Gitcoin ‘commons’.

- Detect — Once adversarial behavior has been defined, we then need to set up detection methods to pick up on it when it occurs. Detection methods will include both human and algorithmic moderators, ranging from community-based reporting (“see something, say something”) to data-science based flagging of suspicious grants or contribution patterns.

- Evaluate — After being flagged, we need human evaluation to make the judgement call as to whether this detected offence is a legitimate behavior or deserves sanction. As pointed out in a previous post, what can appear as an attack is often legitimate grants activity, and vice-versa. There is a necessary requirement for human judgement on issues that have been algorithmically flagged. This is part of the human-machine dynamics of a socio-technical system.

- Sanction — Graduated sanctions can be applied as appropriate to confirmed misbehaviors. Again, per Ostrom’s principles, sanctions for certain behaviors need to be determined as commensurate with the violations of the system and / or community norms.

As mentioned, this is a policy-making approach; a set of ideas and steps used as a basis for making decisions about what is and isn’t acceptable behavior in a semi-automated system. The above framework is being expanded upon in other works by the BlockScience team, and builds on existing work on algorithmic policy more generally. Next, we apply this framework to what we are seeing unfold in Gitcoin Round 9.
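As a rough illustration of how the four steps fit together, here is a minimal Python sketch. Every name in it (`Flag`, `Verdict`, `detect`, `evaluate`, `sanction`) is hypothetical, and folding the human verdict and the sanction severity into one enum is a simplification made for brevity. It is not the Gitcoin or BlockScience implementation.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, Dict, List, Optional


class Verdict(Enum):
    """Outcome of human evaluation (hypothetical categories)."""
    LEGITIMATE = "legitimate"
    LOW = "low severity"
    MID = "mid severity"
    HIGH = "high severity"


@dataclass
class Flag:
    subject: str                        # grant or donor identifier
    rule: str                           # name of the rule that fired
    verdict: Optional[Verdict] = None   # stays None until a human decides


# Step 1, Define: the community agrees on named rules (predicates over records).
Rule = Callable[[dict], bool]


def detect(records: List[dict], rules: Dict[str, Rule]) -> List[Flag]:
    """Step 2, Detect: flag every record that matches a defined rule."""
    return [Flag(record["id"], name)
            for record in records
            for name, rule in rules.items()
            if rule(record)]


def evaluate(flag: Flag, human_verdict: Verdict) -> Flag:
    """Step 3, Evaluate: a human moderator, not the algorithm, sets the verdict."""
    flag.verdict = human_verdict
    return flag


def sanction(flag: Flag) -> str:
    """Step 4, Sanction: map the verdict to a graduated response."""
    if flag.verdict is None:
        raise ValueError("flag has not been evaluated by a human yet")
    actions = {
        Verdict.LEGITIMATE: "no action",
        Verdict.LOW: "exclude from airdrop data",
        Verdict.MID: "collapse small donations for matching",
        Verdict.HIGH: "remove grant or donation",
    }
    return actions[flag.verdict]
```

The design choice worth noting is that `sanction` refuses to act on a flag with no verdict, so nothing detected algorithmically can be sanctioned without a human decision in between.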

Convening a Gitcoin Community Council

The Gitcoin team is assembling a council of community-stewards to gather diversified feedback in defining & sanctioning unacceptable behaviors in the Grants ecosystem. Feedback will also be solicited from the wider community via Twitter and Discord. Please reach out there if you are interested in participating in the discussion!

This council process will be coupled with a semi-supervised machine-learning pipeline, taking a “cyborg learning” approach to detecting attackers. (In other words, we use machine learning to scale the limited capacity of humans to label things.) When compared to standard machine learning, this allows full use of both the intellectual and data resources available in the ecosystem. The result is algorithms that are more accurate, transparent, fair and agile to implement, while also being more adaptable to sudden changes and developments.
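To give a feel for what "scaling human labels" can mean, here is a deliberately simple sketch in which a handful of human-labeled accounts pass their labels to nearby unlabeled accounts, and anything too far from a labeled example is left for a human. This nearest-neighbour stand-in is an assumption for illustration only. The feature choice, the `propagate_labels` name and the distance threshold are all hypothetical, and the actual pipeline is not described in this post.

```python
import math
from typing import Dict, List, Optional, Tuple

# Hypothetical features: (number of grants funded, mean donation in USD).
# A real pipeline would scale features so that no single one dominates distance.
Point = Tuple[float, float]


def propagate_labels(
    labeled: List[Tuple[Point, str]],
    unlabeled: Dict[str, Point],
    max_distance: float,
) -> Dict[str, Optional[str]]:
    """Copy each human label to unlabeled accounts within max_distance.

    Accounts with no labeled neighbour close enough get None, meaning
    "route this one to a human moderator".
    """
    result: Dict[str, Optional[str]] = {}
    for name, point in unlabeled.items():
        nearest_label, nearest_dist = None, math.inf
        for ref_point, label in labeled:
            d = math.dist(point, ref_point)
            if d < nearest_dist:
                nearest_label, nearest_dist = label, d
        result[name] = nearest_label if nearest_dist <= max_distance else None
    return result


human_labels = [((40.0, 0.5), "suspicious"), ((3.0, 25.0), "legitimate")]
accounts = {"a": (38.0, 0.6), "b": (4.0, 20.0), "c": (20.0, 10.0)}
suggested = propagate_labels(human_labels, accounts, max_distance=6.0)
```

In this toy data, accounts `a` and `b` inherit a label from their nearest human-labeled example, while `c` resembles neither and is returned as `None` for human review.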

The intention behind these discussions is to open up the configuration of this attack-mitigation framework to a wider stakeholder community than just the core Gitcoin team. This is part of the plan to progressively decrease centralized control measures in the Gitcoin ecosystem. Appropriate human intervention and strong community engagement are essential to the proper operation of socio-technical systems. Not everything needs to be automated away, and what is automated should be automated carefully. Public goods systems, such as Gitcoin, are inextricably socio-technical. Many lessons in AI ethics and algorithmic fairness can be gained from Aaron Roth’s The Ethical Algorithm and ongoing work on algorithms in society by some of our research contributors. The goal is transparent, accessible, and tangible policy involving humans in the loop where needed in automated policy systems.

The community council is not a centralized force but rather a coalition of willing community members: its members are exactly those who have stepped up to donate their time and attention in service of the community. This group administers a broad, albeit rough, consensus on definitions and sanctions for adversarial behavior. The group of Gitcoin community-stewards has been invited by the Gitcoin team based upon the following criteria:

- Have been active in giving feedback in the past

- Have been active in giving funding in the past

- Have expressed willingness to thoughtfully/constructively push back on Gitcoin policy they don’t believe in.

This process is about engaging in an effective experiment in open-source community funding, under ongoing iteration and improvement, with help from a community of participants that make it meaningful. Next, we’ll walk through the framework step-by-step in further detail. It is worthwhile to note here that by no means do we wish to insinuate that this discovery, development, and improvement process invalidates the outcomes of Gitcoin grants in any way. The process being conducted is as follows:

1. Defining Adversarial Behavior: Collusion, Sybil Attacks & More

Definitions are inherently troublesome, as certain vocabulary means different things across different areas of expertise. There are also assumptions to overcome about the normative basis of what is ‘acceptable’, as the Gitcoin community decides on the subjective basis of these definitions.

As pointed out in a previous article, collusion is tricky to define, since sometimes coordinated but honest behavior can look like an attack. Often, intent is the key difference between acceptable coordination and unacceptable collusion in grant funding. Given the difficulty of measuring the intent behind each grant and donation in a graph of tens of thousands of such contributions, we must instead define patterns of behavior that look like collusion, so that appropriate detection and flagging algorithms can be set up to deal with them at scale.

While we expect all participating communities in the grants ecosystem to coordinate to some degree on funding their grant, we need to ensure that the near-term needs of participants are in line with the long-term sustainability of the Grants ecosystem.

How we define acceptable contribution behaviors needs to involve the Gitcoin community, because you are the biggest stakeholders in the Grants ecosystem, after all. What is and is not acceptable donation behavior to the Ethereum public goods funding community? What role should bots and automation play in the future of the Grants platform? These are questions that will be pondered by the council and wider Gitcoin community.

2. Detecting Adversarial Behavior with Data Science

After defining the types of behavior we want to mitigate in the Gitcoin Grants ecosystem, the next step to mitigating those attack vectors is detecting when they occur. In this step we can use many different tools, from community reporting to algorithms that monitor and flag suspicious grant or donor behaviors.

Let’s look at an example of a suspicious donation pattern, where someone donates less than a dollar to 10 grants, all of which seem likely to give an airdrop. There are a few options we can consider in this scenario:

The donor is a bot — This option presents multiple issues for discussion, since not only is a bot most likely a sybil account, we also need to understand the use of automated donations in the Grants ecosystem, and whether we choose to allow bots to participate at all.

The donor is a sybil account — A multitude of new accounts donating to the same grants causes the quadratic funding formula to overweight the matching funds received by those grants. This attack would be outside the rules of the Gitcoin ecosystem and in need of appropriate sanction.

The donor is a real person just trying to get by in the world — Perhaps the donor was truly a real person, who wants to support organic web3 projects that are likely to give airdrops in the future. As a low-income individual, perhaps this donor was only able to contribute small amounts to these grants, making them look like a bot attack. Is this not the kind of person whose voice we are trying to protect, even augment, with quadratic funding?
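To see why the sybil scenario above matters, here is a minimal sketch using the textbook quadratic funding formula, in which a grant's unnormalized match is the square of the sum of the square roots of its contributions, minus the amount contributed. The function name `qf_match_weight` is our own, and Gitcoin's production matching may apply further adjustments that are not modeled here.

```python
import math
from typing import Iterable


def qf_match_weight(contributions: Iterable[float]) -> float:
    """Unnormalized textbook QF match for one grant.

    (sum of sqrt(c_i))^2 - sum(c_i); the real payout would be this weight's
    share of the matching pool across all grants.
    """
    amounts = list(contributions)
    return sum(math.sqrt(c) for c in amounts) ** 2 - sum(amounts)


# The same $100 reaches the grant in both cases.
one_donor = qf_match_weight([100.0])        # a single account giving $100
many_sybils = qf_match_weight([1.0] * 100)  # $1 from each of 100 accounts

print(one_donor)    # 0.0
print(many_sybils)  # 9900.0
```

The formula rewards the number of distinct contributors, which is exactly what makes it valuable for small donors and exactly what fake accounts exploit. It cannot tell 100 real people from one person with 100 accounts, which is why the definition and evaluation steps need human input.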

At first pass, many of our detection tactics and algorithms may flag innocent grants and donors as malicious because of their occasionally similar patterns. But this is not a bad thing. Due to the difficulty of defining collusion in the first place, the ecosystem benefits from having aggressive flagging mechanisms to catch potential wrongdoers, while relying on appropriate lenience from the judgement of human evaluators. In the next section, we show how this works in practice.
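As a sketch of what a deliberately aggressive flagging rule could look like, the snippet below flags donors who match the example pattern described above: only sub-dollar donations, spread across at least ten grants. The function name and the thresholds (`max_amount`, `min_grants`) are illustrative assumptions, not the rules Gitcoin actually uses, and the output is a list of candidates for human review rather than a list of offenders.

```python
from collections import defaultdict
from typing import Dict, Iterable, Set, Tuple

Donation = Tuple[str, str, float]  # (donor id, grant id, amount in USD)


def flag_micro_donors(
    donations: Iterable[Donation],
    max_amount: float = 1.0,
    min_grants: int = 10,
) -> Set[str]:
    """Flag donors whose donations are all below max_amount and who
    gave to at least min_grants distinct grants."""
    small_grants: Dict[str, Set[str]] = defaultdict(set)
    gave_larger: Set[str] = set()
    for donor, grant, amount in donations:
        if amount < max_amount:
            small_grants[donor].add(grant)
        else:
            gave_larger.add(donor)
    return {
        donor
        for donor, grants in small_grants.items()
        if len(grants) >= min_grants and donor not in gave_larger
    }


sample = [("x", f"grant-{i}", 0.5) for i in range(10)]       # matches the pattern
sample += [("y", "grant-0", 0.5), ("y", "grant-1", 0.5)]     # too few grants
sample += [("z", f"grant-{i}", 0.5) for i in range(10)]
sample += [("z", "grant-3", 50.0)]                           # also gave a larger amount
flagged = flag_micro_donors(sample)
```

Here only donor `x` is flagged. A rule like this cannot distinguish a bot from a low-income donor with the same pattern, so its results are meant to feed the evaluation step, not to trigger sanctions directly.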

3. Evaluating Flagged Behavior

After detection comes evaluating the flagged grants and donations. Due to the highly subjective nature of evaluating flagged grants, this stage is best handled by humans who can understand the context of a given situation. This group of moderators is tasked with determining whether flagged events go against the Terms & Conditions of the Grants ecosystem, or whether they are merely organic communities coordinating in ways that resemble attack vectors (as explored in our previous post).

We must exercise caution in this stage to adhere to the goal of the system. Since the intention of Quadratic Funding is to essentially amplify the voice of financially underrepresented contributors, we must be aware that these contributors could look like bots or attackers via similar data trails: for example, the use of small donations to isolated local projects, or the sharing of devices or accounts.

In the evaluation phase, moderators have a few things to consider. First, they can look at the creators of flagged grants. What seems to be their intention? What other behaviors have they engaged in? Are they asking for a quid pro quo for donations? Does their data trail suggest they are in a sybil ring? Second, they can consider the intent of flagged contributors. Are there reasons that a series of small donations could be a legitimate contribution from a well-meaning donor, despite the pattern resembling a bot?

At this point in time, and given the sensitivity of the data under discussion, the Gitcoin team can steward the best interests of the Gitcoin ecosystem by evaluating flagged behaviors itself. The team's interest in progressive decentralization could, however, provide the opportunity to iteratively include more community input via future mechanisms of the Gitcoin platform. Once the list of flagged transactions has been sorted appropriately, the next stage of the framework is the application of graduated sanctions to confirmed offenders.

4. Determining Appropriate Sanctions

There are a number of ways we can sanction inappropriate actions in the Grants ecosystem. Once again, we need the feedback of the Gitcoin community in determining what are appropriate sanctions for different behaviors. As suggested by Ostrom’s principles for the management of common resources (like the Gitcoin matching pool), ‘graduated sanctions’ should increase in severity based on the seriousness of the infraction. For example, is the offender a bot or a repeat offender?

Some examples of scaled sanctions could be:

- High severity — Removal of grant or donation from the platform. Can be used in the case of clear violation of Gitcoin T&Cs.

- Mid severity — Allow donations to go through, but collapse small / spammy donations into a single account to reduce QF matching impact. Can be used to moderate borderline collusion attempts by reducing the drain on communal matching funds.

- Low severity — Allow donation and matching, but clean contribution data so that bot donations & spam attacks do not receive airdrops. Can be used to remove spammy accounts from consideration for future airdrops, disincentivizing this kind of behavior going forward.
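To illustrate the mid-severity option in the list above, here is a minimal sketch of how collapsing flagged accounts into a single contributor reduces their effect on matching while leaving the donated amount untouched. It uses the textbook quadratic funding formula (square of the sum of square roots, minus the sum). The function names and the `"__collapsed__"` key are hypothetical, and this is not Gitcoin's actual matching code.

```python
import math
from typing import Dict, Iterable, Set


def qf_match_weight(amounts: Iterable[float]) -> float:
    """Unnormalized textbook QF match: (sum of sqrt(c_i))^2 - sum(c_i)."""
    values = list(amounts)
    return sum(math.sqrt(c) for c in values) ** 2 - sum(values)


def collapse_flagged(
    contributions: Dict[str, float], flagged: Set[str]
) -> Dict[str, float]:
    """Merge all flagged accounts into one pseudo-account for matching.

    The donations still go through; they just count as one voice.
    """
    merged = {acct: amt for acct, amt in contributions.items()
              if acct not in flagged}
    pooled = sum(amt for acct, amt in contributions.items() if acct in flagged)
    if pooled > 0:
        merged["__collapsed__"] = pooled  # hypothetical placeholder key
    return merged


grant = {"alice": 25.0, "bob": 16.0}
grant.update({f"bot-{i}": 1.0 for i in range(9)})  # nine $1 spam accounts
spam = {f"bot-{i}" for i in range(9)}

before = qf_match_weight(grant.values())                         # 274.0
after = qf_match_weight(collapse_flagged(grant, spam).values())  # 94.0
```

The grant still receives the full $50 in donations, but its matching weight falls from 274 to 94 because the nine spam accounts are treated as one $9 contributor.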

Sanctions are a necessary step for accountability and recourse. They should be transparently communicated to participants, to set clear community boundaries and allow participants to choose their behaviors within the decision-space that is determined as appropriate. Again, this is a subjective and iterative practice. This is where precedents get set, and where the definitions of what is appropriate become normalized and internalized in the rules of the system and the norms and behaviors of the community.

The Gitcoin team is coordinating a group of community stewards who will provide key input to the sanction process, to ensure community needs are met with new policy decisions being made moving forward. Ultimately, this input is key to maintaining a well operating, credibly neutral web3 grants platform for funding public goods.

How the Gitcoin Community Can Help!

Computer systems make more sustainable, diverse and efficient human organizations viable. Even though algorithms are code-based, in the end these systems consist of human-machine ensembles. This means that policy-making processes which set out how decisions are made, not just in terms of code but also appropriate human involvement, are crucial to the sustainable functioning of the system. This process is in flight. Below are a few ways you can be involved:

- See something? Say something! Community monitoring for bad behavior is key! If you notice suspicious donations coming to your grant, like lots of small contributions from people you don’t know, or large donations cancelled for strange reasons, log it in the Gitcoin Discord grant-help channel or via the @GitcoinDisputes Twitter account.

- Help us to decide appropriate Grants policy using Rawls’s Veil of Ignorance. We need your input to design a Grants policy that is fair to all, regardless of how those decisions directly impact your grants income. Credible neutrality requires that we set fair policy (definition, detection, evaluation, sanction) that is not biased by its effects on our individual grant incomes.

Gitcoin will soon be publishing a Round 9 governance brief for community review. All are invited to comment on Twitter and in the gov.gitcoin.co forum.

Article by Jeff Emmett, Kelsie Nabben, Danilo Lessa Bernardineli, and Michael Zargham, with thanks to Kevin Owocki, Disruption Joe, and Matthew Carano for key insights and feedback.