Charles Rice with Michael Zargham

We live in wondrous and exciting times, on the edge of a shift no less significant than the personal computing revolution of the 1980s and 90s. The growth and development of open source, purpose-built networks on blockchain protocols creates an unprecedented opportunity to blend theory with practice, and realize whole socio-technical ecosystems from the ground up.

To recap the important points from the previous part of this series:

- Blockchain protocols will become as central to daily life as the semiconductor

- The networks built on those protocols provide an opportunity to design systems like credit that took centuries to evolve in organic networks

- A new discipline, token engineering, is emerging to design and build these networks

In the last article, I described an iterative technology stack connecting semiconductors to air travel. I neglected, however, to describe what that stack looks like.

The truly radical thing about blockchain is that it creates objective reality. It’s not the objective reality — not reality with a capital ‘real’ — but it is the reality that all parties to a transaction have explicitly accepted. It’s an innovation on the level of double-entry bookkeeping in the 15th century. In addition to being an abstract representation, the record of a transaction is also the transaction itself.

In more traditional data settings, like a centrally administered database, we can’t work with data directly and maintain its reliability. We typically have to extract a copy and work from that. A blockchain ledger (or related technology like IPFS or BigchainDB), secured through cryptography and redundancy, enables data to be used directly while remaining trustworthy. So long as the data remains trustworthy and the computation network secure, smart contracts can be built to govern and influence the behavior of actors in the network.
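To make the "secured through cryptography" part concrete, here is a minimal sketch of a hash-linked ledger in Python. It is an illustration only, not how any particular blockchain is implemented: the function names (`block_hash`, `append_block`, `is_valid`) and the sample transactions are assumptions invented for this example, and the redundancy half of the story (many nodes holding copies and agreeing on them) is left out entirely.

```python
import hashlib
import json

GENESIS_PREV_HASH = "0" * 64  # placeholder "previous hash" for the first block (assumption)


def block_hash(index, data, prev_hash):
    """Hash a block's contents together with the previous block's hash."""
    payload = json.dumps(
        {"index": index, "data": data, "prev_hash": prev_hash}, sort_keys=True
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_block(chain, data):
    """Append a new block that commits to everything recorded before it."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS_PREV_HASH
    index = len(chain)
    block = {
        "index": index,
        "data": data,
        "prev_hash": prev_hash,
        "hash": block_hash(index, data, prev_hash),
    }
    chain.append(block)
    return block


def is_valid(chain):
    """Recompute every hash and check that each block points at its predecessor."""
    prev_hash = GENESIS_PREV_HASH
    for i, block in enumerate(chain):
        if block["index"] != i or block["prev_hash"] != prev_hash:
            return False
        if block["hash"] != block_hash(block["index"], block["data"], block["prev_hash"]):
            return False
        prev_hash = block["hash"]
    return True


# Hypothetical transactions, made up for illustration.
ledger = []
append_block(ledger, {"from": "alice", "to": "bob", "amount": 5})
append_block(ledger, {"from": "bob", "to": "carol", "amount": 2})
print(is_valid(ledger))
```

Because each block's hash covers the previous block's hash, quietly editing an old record changes its hash and breaks every link after it. That is the sense in which the data can be read and used in place: anyone can rerun `is_valid` rather than trusting a private copy.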

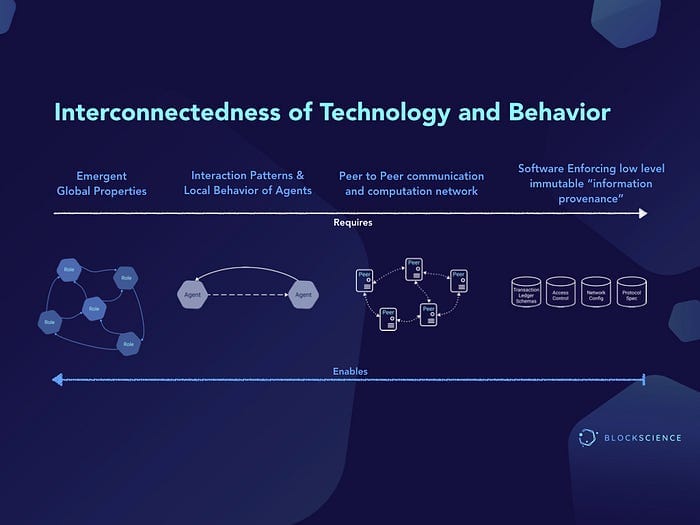

In our semiconductor metaphor, trustworthy data is the semiconductor, the underlying enabling technology. Secured computation is the microchips that harness the semiconductors, and smart contracts take the part of micro-controllers that govern specific systems. Figure 1 offers a visual representation of how technology and behavior interconnect and enable each other. Elements from left to right require their immediate neighbor. From right to left, each element enables its neighbor to exist.

As we move on with token engineering proper, keep in mind the base technology stack: the combination of trustworthy data and secure computation enables the smart contracts, incentives, and mechanisms (the ‘tokens’) that the token engineer designs and builds.

We’re now going to turn our attention to the ‘engineering’ in ‘token engineering.’ It is used here deliberately, and not just to make the whole phrase sound cool. Using engineering, rather than, say, design or development, places our work into a validated framework. It also provides us with a set of proven methods and tools we can bring to bear on the complex challenges that go into realizing new socio-technical networks.

Take a look at the Wikipedia definition of ‘engineering’:

“[T]he … application of science, mathematical methods, and empirical evidence to the innovation, design, construction, operation and maintenance of structures, machines, materials, devices, systems, processes, and organizations for the benefit of humankind.”

There’s a lot there to unpack. But we’re going to start with the last bit: ‘for the benefit of humankind.’ As mentioned in the previous installment, applications on these open networks could come to manage or control anything from a supply chain to the identities of billions of people in an increasingly digital economy. Given their potential, it’s vitally important that these networks be designed well, with deep thought given to the consequences of design decisions.

What is Seen and What is Not Seen

So, how do we really know what is for the benefit of humankind?

The short answer is: we can’t, not in any complete or definitive way. But that doesn’t mean we get to ignore the question. After all, we generally want to make the world better with new technologies, not worse. Instead of looking for a strict ordering of good, we can look for a partial or relative one. For example, it’s easy to argue that suspension bridges have been better for humankind than the electric chair. What about something like nuclear fission, the source of nuclear power and the atomic bomb?

If we looked at nuclear fission only in terms of deaths due to nuclear weapons and reactor catastrophes, we’d most likely conclude humankind was worse off. If, instead, we looked at the reductions in carbon output and fossil fuel consumption due to increased nuclear power generation, we’d almost certainly say it was better off. The question of benefit comes down to choices about definitions, goals, and metrics. Even an objective metric has subjectivity built into the choice and method of measurement.
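One way to picture a partial ordering is a dominance check across several metrics at once. The sketch below is only an illustration under stated assumptions: the metric names and scores are hypothetical, invented for this example rather than measured, and "higher is better" on every metric is itself one of those subjective choices.

```python
def dominates(a, b):
    """True if a is at least as good as b on every metric and strictly better on one.

    Assumes both dicts share the same metric names and that higher is better.
    """
    return all(a[m] >= b[m] for m in a) and any(a[m] > b[m] for m in a)


def compare(a, b):
    """Return 'better', 'worse', 'equal', or 'incomparable' for a relative to b."""
    if dominates(a, b):
        return "better"
    if dominates(b, a):
        return "worse"
    if a == b:
        return "equal"
    return "incomparable"


# Hypothetical scores, made up purely to show the mechanics.
option_a = {"lives_protected": 3, "emissions_avoided": 2}
option_b = {"lives_protected": 1, "emissions_avoided": 2}
option_c = {"lives_protected": 1, "emissions_avoided": 5}

print(compare(option_a, option_b))  # a is ahead on one metric and tied on the other
print(compare(option_a, option_c))  # each option is ahead on a different metric
```

Some pairs can be ranked and some cannot. When two options are incomparable, any verdict depends on how the metrics are weighted, which is exactly where the choice of definitions and goals re-enters.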

For the engineers’ part, though, it is not about casting judgment, but rather about being mindful of consequences, intended or not. Let’s take a look at an all-too-relevant example: ridesharing.

Ridesharing apps have radically changed our lives. They have increased mobility for millions of people. They have provided jobs and pay to countless drivers who might otherwise be unemployed.

At the same time, the proliferation of rideshare apps reversed positive trends in the areas where the apps flourished. Over the prior decade, there had been a general increase in the use of public transportation infrastructure by the financially more fortunate. The sudden abundance of cars and drivers made public transit less attractive and drove a net increase in urban congestion and pollution per mile.

Longer term, assuming the trend continues, we should expect to see a reduction in support for public transportation infrastructure spending and an increasing social stigma associated with public transit use. How do we study trade-offs between the short-term benefit of increased mobility and slow but adverse shifts in behavior?

Systems that involve humans and incentives, whether analog or digital, are inherently complex. They will always involve more consequences than just those built in (e.g., increased congestion in addition to more people being able to get around). As the systems that get built become more central to humanity, it becomes exponentially more important to evaluate those systems as rigorously as we can for those unseen consequences.

In this article, I have tried to demonstrate the need for a rigorous, ethical, and long-sighted approach to building the open networks enabled by blockchain and related technologies. The consequences, both intended and not, have the potential to be huge and come with real human costs. But the opportunity is equally large and should be seized with the right tools. As this series continues, we will take a look at the engineering process and tools, especially as they relate to the broader practice of token engineering.

About BlockScience

BlockScience® is a complex systems engineering, R&D, and analytics firm. Our goal is to combine academic-grade research with advanced mathematical and computational engineering to design safe and resilient socio-technical systems. We provide engineering, design, and analytics services to a wide range of clients, including for-profit, non-profit, academic, and government organizations, and contribute to open-source research and software development.